GLM-5 Claims Open-Source Lead, Closing Gap on GPT-5.2

Z.ai, the developer platform from the Chinese lab Zhipu AI, has launched GLM-5, a new open model built for long, messy work instead of quick chat replies. If you have ever watched an AI start strong and then lose the plot halfway through a real task, that is the failure GLM-5 is built to address. It is meant to plan, use tools, and keep going until the deliverable is actually done.

On scale and efficiency, GLM-5 jumps from its predecessor's 355B total parameters to 744B, while the number of parameters active per step rises only from 32B to 40B. You get a much larger model, but it only “wakes up” the parts it needs for a given step, so serving it can stay within reach. Z.ai also raised pre-training data from 23T to 28.5T tokens, with the aim of keeping long-context ability intact.
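To make those numbers concrete, here is a small back-of-the-envelope calculation using only the figures quoted above. The function name is illustrative, and the ratios say nothing about real serving cost, which also depends on memory, hardware, and batching.

```python
def active_fraction(total_b: float, active_b: float) -> float:
    """Share of a sparsely activated model's parameters used per step."""
    return active_b / total_b


# Figures quoted in the article, in billions of parameters.
previous = active_fraction(total_b=355, active_b=32)
glm5 = active_fraction(total_b=744, active_b=40)

total_growth = 744 / 355    # growth in total parameters
active_growth = 40 / 32     # growth in active parameters per step
token_growth = 28.5 / 23    # growth in pre-training tokens

print(f"previous model: {previous:.1%} of parameters active per step")
print(f"GLM-5:          {glm5:.1%} of parameters active per step")
print(f"total parameters grew {total_growth:.2f}x, active grew {active_growth:.2f}x")
print(f"pre-training tokens grew {token_growth:.2f}x")
```

Total size roughly doubles while the per-step active share falls from about 9% to about 5%, which is the sense in which the larger model can remain practical to serve.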

On the training side, Z.ai describes reinforcement learning as the step that “turns competence into excellence,” while noting that RL is usually too slow to run at this scale. Their answer is slime, an asynchronous setup that lets data collection and training run in parallel, so the model can learn from more attempts per day without waiting on itself. The goal is an agent that does not break when small slips pile up over many steps and the workflow starts to drift.
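The idea of running data collection and training in parallel can be illustrated with a toy producer-consumer loop. This is a minimal sketch of the general pattern, not slime's actual API: every name here (`rollout_worker`, `trainer`, `run`) is hypothetical, and the sleeps stand in for model generation and gradient updates.

```python
import asyncio


async def rollout_worker(worker_id: int, queue: asyncio.Queue, n_episodes: int) -> None:
    """Collect episodes and hand them to the trainer without waiting for it."""
    for episode in range(n_episodes):
        await asyncio.sleep(0.01)  # stand-in for a slow agent rollout
        await queue.put({"worker": worker_id, "episode": episode, "reward": 1.0})


async def trainer(queue: asyncio.Queue, batch_size: int, total: int) -> tuple[int, int]:
    """Consume episodes as they arrive and update once per full batch."""
    steps, seen, batch = 0, 0, []
    while seen < total:
        batch.append(await queue.get())
        seen += 1
        if len(batch) == batch_size:
            await asyncio.sleep(0.005)  # stand-in for a gradient update
            steps += 1
            batch.clear()
    return steps, seen


async def run(n_workers: int = 4, n_episodes: int = 8, batch_size: int = 8) -> tuple[int, int]:
    queue: asyncio.Queue = asyncio.Queue(maxsize=32)
    total = n_workers * n_episodes
    # Workers keep generating while the trainer is busy updating.
    workers = [
        asyncio.create_task(rollout_worker(i, queue, n_episodes))
        for i in range(n_workers)
    ]
    result = await trainer(queue, batch_size, total)
    await asyncio.gather(*workers)
    return result


if __name__ == "__main__":
    steps, seen = asyncio.run(run())
    print(f"{steps} training steps from {seen} episodes")
```

Because the queue decouples the two sides, neither generation nor training sits idle waiting for the other, which is the throughput benefit the article attributes to an asynchronous setup.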

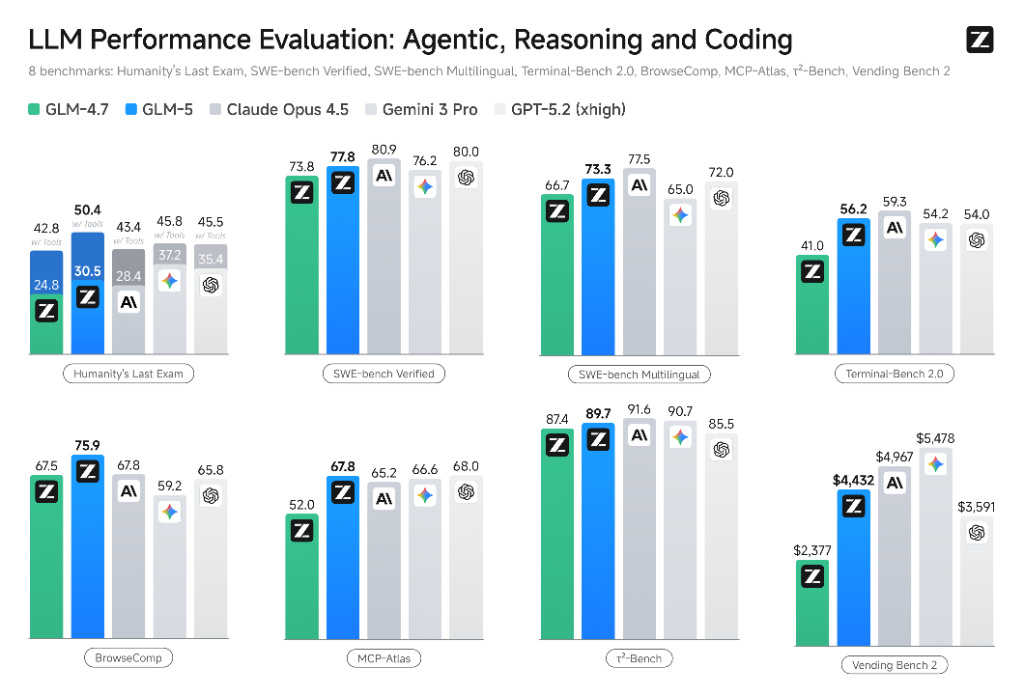

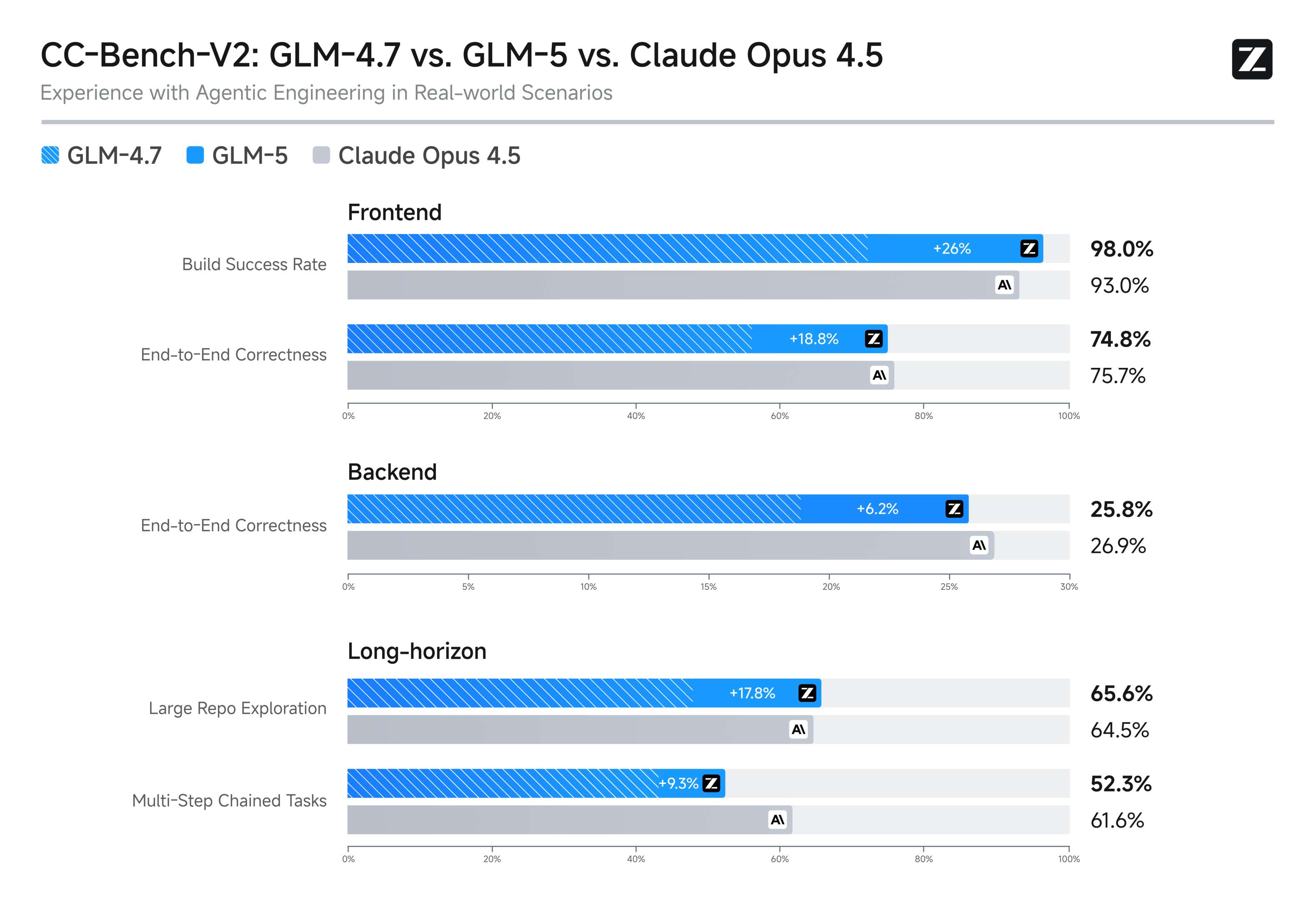

In the results, Z.ai points to SWE-bench Verified (77.8) and Vending Bench 2 (about $4,432) as proof points for coding and long-horizon agent work, and it places GLM-5 on the same chart as frontier baselines such as GPT-5.2, Gemini 3 Pro, and Claude Opus 4.5.

Z.ai uses these results to support its claim that GLM-5 is best-in-class among open-source models while narrowing the gap to the top closed systems. Free tiers of ChatGPT and Claude usually come with tighter caps on usage, context, and tools, so GLM-5 may feel better in day-to-day use: you hit fewer limits mid-task while still getting strong performance.

If you just want to use it, there is a chat mode and an agent mode; Z.ai pitches the agent mode as able to turn source material into real files such as DOCX, PDF, and XLSX. If you want to deploy it, Nvidia’s NIM listing is the “easy button” route, and if you want to run it yourself, Z.ai points to common local serving paths like vLLM and SGLang.
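For the self-hosted route, both vLLM and SGLang can expose an OpenAI-compatible HTTP API, so a first smoke test can be a plain chat completion request. The sketch below uses only the Python standard library. The base URL and model ID are placeholders that must match however your own server was launched, and the helper names are hypothetical.

```python
import json
import urllib.request

# Assumptions: adjust both to match your own server launch settings.
BASE_URL = "http://localhost:8000/v1"
MODEL_ID = "your-glm-5-model-id"  # placeholder, not a confirmed model name


def build_chat_request(prompt: str, base_url: str = BASE_URL,
                       model: str = MODEL_ID, max_tokens: int = 512) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request to a running server and return the reply text."""
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request("Summarize this repo's README in three bullets.")
    print(send(req))  # requires a server listening at BASE_URL
```

Keeping request construction separate from sending makes it easy to inspect or log the payload before pointing it at a real deployment.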

In other words, this is not being shipped as a lab demo. It is being packaged as a model you can plug into a workflow and test end-to-end against your own needs.

Y. Anush Reddy is a contributor to this blog.